I was working on some ESXi upgrades recently. We’re currently preparing everything so that the eventual upgrade to vSphere 7 goes as smooth as silk. That means we’re rolling out vSphere 6.7 on all of our systems. Recently, I was tasked with upgrading some hosts in a facility some hundred miles away. The task itself was super easy; handling it with vSphere Update Manager worked like a charm. But before the vSphere upgrade, I had to upgrade the BIOS and server firmware to make sure that we’re in line with the VMware HCL.

The second host was done within one hour and received the complete care package. But the first host took a bit longer due to unforeseen troubleshooting. I’d like to share some helpful tips (hopefully they’re helpful).

What happened?

As mentioned, upgrading the ESXi host through the vSphere Update Manager worked like a charm. But before that, I booted the server remotely with the Service Pack for ProLiant ISO image to upgrade the BIOS and firmware of that server. That also went very well. As there are two ESXi hosts at this location, we had shared storage available and were able to move the VMs from one host to the other without further issues. Place one host into maintenance mode, upgrade it, take it out of maintenance mode, and then do the same for the second server. That was the idea.

But unfortunately, the gods of IT had something different in mind. After upgrading the first host, we tried to move the VMs back to this host to prepare the upgrade for the second host. Well, some VMs were able to be moved there, some were not. But why?

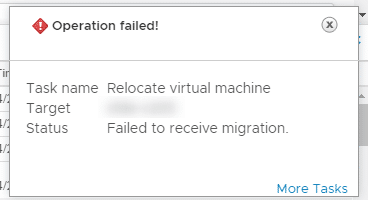

When we moved some particular VMs back from the second host to the upgraded host, we received the error below:

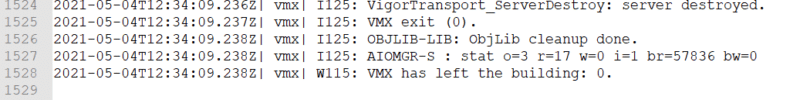

That made us curious. Why did this happen? When checking the tasks and events of that host and of the affected VM in vCenter, we didn’t find much information. After some internet research, we found some possible causes, but not all of them fit our issue. So we had to dig a bit deeper. Thanks to the vmware.log file, located in the VM folder, we were able to find out the following:

OK, it sounds funny that the VMX has left the building, but we weren’t sure why. Seems to have been a boring party…

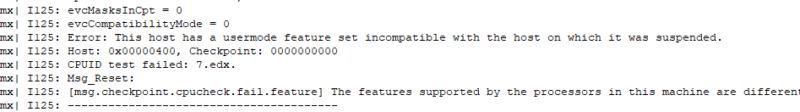

Some more digging brought some more helpful information:

Obviously, the vMotion failed because some CPU features are different between the first (the already updated) and the second host. But wait, the two servers are the same model, with the same hardware configuration? How can that be?
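If you have to dig through a vmware.log like this yourself, a small filter can save some scrolling. The following is only a minimal Python sketch, not something we used during the upgrade. The function names `find_log_hints` and `scan_file` are made up for illustration, and apart from the “has left the building” message mentioned above, the search terms are assumptions about what a relevant line might contain.

```python
from pathlib import Path
from typing import Iterable, List, Sequence, Tuple

# Search terms are assumptions for illustration; adjust them to what your log shows.
DEFAULT_KEYWORDS = ("has left the building", "cpuid", "migrat")


def find_log_hints(
    lines: Iterable[str], keywords: Sequence[str] = DEFAULT_KEYWORDS
) -> List[Tuple[int, str]]:
    """Return (line number, text) for each line containing any keyword (case-insensitive)."""
    lowered = [keyword.lower() for keyword in keywords]
    hits = []
    for number, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if any(keyword in text.lower() for keyword in lowered):
            hits.append((number, text))
    return hits


def scan_file(
    path: str, keywords: Sequence[str] = DEFAULT_KEYWORDS
) -> List[Tuple[int, str]]:
    """Scan a log file that was copied off the datastore (hypothetical helper)."""
    # errors="replace" keeps the scan going if the log contains unexpected bytes
    with Path(path).open(encoding="utf-8", errors="replace") as handle:
        return find_log_hints(handle, keywords)
```

Calling `scan_file("vmware.log")` on a copy of the log returns the matching lines together with their line numbers, which makes it easier to jump to the surrounding context in an editor.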

The solution

That led us to the conclusion that it must be something with the VM compatibility level. But wait again, some movable VMs were on VM HW version 8, and the VMs with failed vMotion were also on VM HW version 8? To be honest, we weren’t able to pin down the exact difference. But it did lead us to two possible solutions: either upgrade the VM HW version or install an ESXi patch. We decided to install the patch, as we didn’t want to reboot some VMs (although we did that later).

And before you complain: yes, I’m aware that the patch in the linked KB article is not the most recent ESXi build. It’s somewhat historical. When we started with the global vSphere 6.7 rollout, vSphere 6.7 Update 2 was the latest version available. And yes, we’re currently planning new rollouts again, as this was sadly neglected in the past. But you know, time and human resources, and change requests…